Generative Adventures: Exploring the Frontiers with VAEs, GANs, and VAE-GANs

During my time in the Applied Machine Learning course (CS441) at the University of Illinois at Urbana-Champaign, I embarked on an ambitious journey into the realm of advanced machine learning technologies. The course required a comprehensive understanding of various machine learning concepts, providing both breadth and depth in our studies. For my optional final project, I chose to specialize in some of the most intriguing areas of generative models: Variational AutoEncoders (VAEs), Denoising AutoEncoders (DAEs), Generative Adversarial Networks (GANs), and the hybrid Variational Autoencoder Generative Adversarial Networks (VAE-GANs). Here, I provide a high-level overview of each technology and discuss the outcomes of my experiments. For further information about the course, please see the sample syllabus.

Variational AutoEncoders (VAEs)

VAEs are powerful generative models that use the principles of probability and statistics to produce new data points that are similar to the training data. Unlike traditional autoencoders, which aim to compress and decompress data, VAEs introduce a probabilistic twist to encode input data into a distribution over latent space. This approach not only helps in generating new data but also improves the model’s robustness and the quality of generated samples. VAEs are particularly effective in tasks where you need a deep understanding of the data’s latent structure, such as in image generation and anomaly detection.

The encoder in a VAE is responsible for transforming high-dimensional input data into a lower-dimensional and more manageable representation. However, unlike standard autoencoders that directly encode data into a fixed point in latent space, the encoder in a VAE maps inputs into a distribution over the latent space. This distribution is typically parameterized by a mean (mu) and a variance (sigma squared) for each dimension of the latent space, which together define a Gaussian probability distribution.

The latent space in VAEs is the core feature that distinguishes them from other types of autoencoders. It is a probabilistic space where each input is represented not by a single set of coordinates, but by a distribution over possible values. This stochastic nature of the latent space allows VAEs to generate new data points by sampling from these distributions, providing a mechanism to capture and represent the underlying probabilistic properties of the data. It essentially acts as a compressed knowledge base of the data’s attributes.
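To make the sampling step concrete, here is a minimal sketch in plain Python. It assumes the encoder outputs a mean and a log-variance per latent dimension and uses the common reparameterization form z = mu + sigma * eps. The function name and the log-variance convention are illustrative assumptions, not taken from the course code.

```python
import math
import random

def sample_latent(mu, log_var, rng=random):
    """Draw one latent vector z = mu + sigma * eps, with eps ~ N(0, 1) per dimension."""
    z = []
    for m, lv in zip(mu, log_var):
        sigma = math.exp(0.5 * lv)   # convert log-variance to standard deviation
        eps = rng.gauss(0.0, 1.0)    # noise drawn independently of the encoder output
        z.append(m + sigma * eps)
    return z
```

Calling `sample_latent` twice with the same `mu` and `log_var` gives two different latent vectors, which is what lets the decoder produce outputs that are similar but not identical.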

Once a point in the latent space is sampled, the decoder part of the VAE takes over to map this probabilistic representation back to the original data space. The decoder learns to reconstruct the input data from its latent representation, aiming to minimize the difference between the original input and its reconstruction. This process is governed by a loss function that has two components: a reconstruction loss that measures how effectively the decoder reconstructs the input data from the latent space, and a regularization term that ensures the distribution characteristics in the latent space do not deviate significantly from a predefined prior (often a standard normal distribution).
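The two-part loss described above can be sketched as follows. This assumes a squared-error reconstruction term, a diagonal Gaussian encoder, and a standard normal prior, for which the regularization term (the KL divergence) has a closed form. The function names and the `beta` weight are hypothetical and only for illustration.

```python
import math

def reconstruction_error(x, x_hat):
    """Sum of squared differences between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL divergence from N(mu, sigma^2) to N(0, 1), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Reconstruction term plus a weighted regularization term (beta is an assumed knob)."""
    return reconstruction_error(x, x_hat) + beta * kl_to_standard_normal(mu, log_var)
```

When the encoder already outputs a standard normal (mean 0, log-variance 0), the regularization term is zero and only the reconstruction error remains.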

In practice, the encoder’s output of means and variances provides a smooth and continuous latent space, which is crucial for generating new data points that are similar but not identical to the original data. This property makes VAEs particularly useful in tasks requiring a deep generative model, such as synthesizing new images that share characteristics with a training set, or identifying anomalies by seeing how well data points reconstruct using the learned distributions.

Denoising AutoEncoders

Denoising Autoencoders (DAEs) are specialized neural networks aimed at improving the quality of corrupted input data by learning to restore its original, uncorrupted state. This functionality is crucial in applications such as image restoration, where DAEs enhance image clarity by effectively removing noise. They achieve this through a training process that involves a dataset containing pairs of noisy and clean images. By repeatedly adjusting its weights over this training set, the DAE learns the underlying patterns necessary to filter out the distortions and recover the clean data. This ability to directly process and improve corrupted data makes DAEs valuable for various tasks beyond image restoration, including audio cleaning and improving data quality for analytical purposes.
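One way to build the noisy/clean training pairs mentioned above is to corrupt each clean image on the fly. The sketch below assumes pixel values in [0, 1] and additive Gaussian noise. The noise type, the `noise_std` default, and the function name are assumptions for illustration and may differ from what the course project used.

```python
import random

def make_denoising_pair(clean_pixels, noise_std=0.5, rng=random):
    """Return (noisy_input, clean_target) for one flattened image with values in [0, 1]."""
    noisy = []
    for p in clean_pixels:
        corrupted = p + rng.gauss(0.0, noise_std)      # add zero-mean Gaussian noise
        noisy.append(min(1.0, max(0.0, corrupted)))    # clip back into the valid pixel range
    return noisy, list(clean_pixels)
```

The network is fed the first element of the pair and its output is compared against the second, so it is never rewarded for simply copying the noise.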

Source: https://blog.keras.io/building-autoencoders-in-keras.html

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) utilize a unique framework involving two competing neural networks: a generator and a discriminator. These networks engage in an adversarial game, where the generator’s goal is to create synthetic data that is indistinguishable from real-world data, effectively “fooling” the discriminator. The discriminator’s job, on the other hand, is to distinguish between the authentic data and the synthetic creations of the generator.

This dynamic creates a feedback loop where the generator continually learns from the discriminator’s ability to detect fakes, driving it to improve its data generation. As the generator gets better, the discriminator’s task becomes more challenging, forcing it to improve its detection capabilities. Over time, this adversarial process leads to the generation of highly realistic and convincing data outputs.
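The feedback loop can be expressed as two opposing losses. The sketch below assumes the discriminator outputs a probability that a sample is real and uses binary cross-entropy with the commonly used non-saturating generator loss. The function names are hypothetical, and a real training loop would compute these on tensors inside a deep learning framework.

```python
import math

def _safe_log(p, eps=1e-12):
    """Logarithm with clamping so probabilities of exactly 0 do not blow up."""
    return math.log(min(1.0, max(eps, p)))

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    real_term = -sum(_safe_log(p) for p in d_real) / len(d_real)
    fake_term = -sum(_safe_log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Low when the discriminator scores generated samples near 1 (it is fooled)."""
    return -sum(_safe_log(p) for p in d_fake) / len(d_fake)
```

The same discriminator scores on generated samples push the two losses in opposite directions, which is the adversarial game in numeric form.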

GANs have been particularly successful in the field of image generation, where they are used to create highly realistic images that are often indistinguishable from actual photographs. A prominent example is the ThisPersonDoesNotExist website, which uses a model called StyleGAN2 to generate lifelike images of human faces that do not correspond to real individuals. This technology has also been applied in other areas such as art creation, style transfer, and more. Eerie.

Source: https://developers.google.com/machine-learning/gan/gan_structure

Variational Autoencoder Generative Adversarial Networks (VAE-GANs)

VAE-GANs are an innovative hybrid model that synergistically combines Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) to enhance the quality and control of data generation. The model integrates the VAE’s capability for creating a compressed, latent representation of data with the GAN’s strength in generating high-fidelity outputs.

In a VAE-GAN system, the encoder part of the VAE compresses input data into a latent space (a condensed representation), which the GAN’s generator (which doubles as the VAE’s decoder) then uses to reconstruct outputs that are as realistic as possible. This setup leverages the VAE’s ability to manage and interpret the latent space effectively, providing a structured, meaningful input for the GAN’s generator. The discriminator in the GAN setup then evaluates these outputs against real data, guiding the generator to improve its outputs continually.
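A rough sketch of how the loss terms might be routed to the three components, loosely following the update rules in the Larsen et al. paper cited below. It assumes three scalar losses have already been computed: the KL regularizer, a reconstruction error measured on discriminator features, and the discriminator's adversarial loss. The function name, argument names, and the `gamma` weight are illustrative assumptions.

```python
def vae_gan_objectives(kl, feature_recon, gan, gamma=1.0):
    """Combine three scalar losses into one objective per network (each is minimized)."""
    return {
        # Encoder: keep the latent distribution near the prior and reconstruct well.
        "encoder": kl + feature_recon,
        # Decoder/generator: reconstruct well while working against the discriminator.
        "decoder": gamma * feature_recon - gan,
        # Discriminator: only cares about telling real from generated.
        "discriminator": gan,
    }
```

The `gamma` weight trades reconstruction fidelity against realism for the decoder, which is one of the knobs that makes balancing this kind of model fiddly.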

The fusion of these two models allows for a more controlled generation process, which can lead to higher quality outputs than what might be achieved by either model alone. This approach not only enhances the detail and realism of the generated data but also improves the model’s ability to learn diverse and complex data distributions, making VAE-GANs particularly useful in tasks that require a high level of detail and accuracy, such as in image generation and modification.

Source: Larsen, Anders & Sønderby, Søren & Winther, Ole. (2015). Autoencoding beyond pixels using a learned similarity metric.

Project Outcomes

The practical application of these models in my project yielded fascinating insights and results. For instance, the VAEs demonstrated an impressive ability to generate new images that closely resembled the original dataset, while the Denoising AutoEncoders more or less restored a significant portion of corrupted images to their original state. Similarly, the GANs produced images that were often indistinguishable from real ones, highlighting their potential in creating synthetic data for training other machine learning models without the need for extensive real-world data collection.

The VAE-GANs, however, were the highlight, combining the best aspects of their constituent models to generate supremely realistic and diverse outputs. While I am unable to share specific code snippets of the DAE/VAE due to copyright restrictions on the course content, the qualitative outcomes were highly encouraging and indicative of the powerful capabilities of hybrid generative models.

Results

Denoising AutoEncoder

As you can see in the image above, the model does an acceptable job of denoising the middle image. The top image is the original, the middle is the image with 50% noise, and the bottom is the model’s denoised output. Had I trained the model longer and varied the training data, I likely would have gotten a better result. Additionally, Principal Component Analysis and a calculation of the Mean Residual Error were performed to determine how well the model works. See below:

Loss over Epochs (note that the plots say reconstruction error when I really meant Mean Squared Error loss):

I decided to use a standard fixed learning rate here and only trained for 240 epochs.
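For reference, the Mean Squared Error mentioned above can be computed per image as in the sketch below. Comparing the error of the noisy input against the error of the denoised output, both measured against the clean original, gives a simple check that the model helps. The pixel values here are made-up toy numbers, not results from this project.

```python
def mean_squared_error(a, b):
    """Average squared difference between two equally sized flattened images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

# Toy 4-pixel example (illustrative values only).
clean = [0.0, 1.0, 1.0, 0.0]
noisy = [0.5, 0.5, 1.0, 0.5]
denoised = [0.1, 0.9, 1.0, 0.1]

error_before = mean_squared_error(noisy, clean)
error_after = mean_squared_error(denoised, clean)
```

If `error_after` is smaller than `error_before`, the denoised image is closer to the original than the corrupted input was.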

Variational AutoEncoder

Here we were tasked with coming up with a VAE which would generate images and also be able to interpolate between images.
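Interpolating between two images is usually done in latent space rather than pixel space: encode both images, walk between the two latent vectors, and decode each step. The sketch below shows only the walking part, assuming straight-line (linear) interpolation. The function name is hypothetical, and the encoder and decoder are left out.

```python
def interpolate_latents(z_start, z_end, steps):
    """Return `steps` latent vectors evenly spaced on the line from z_start to z_end."""
    if steps < 2:
        raise ValueError("need at least two steps to include both endpoints")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # runs from 0.0 at the start to 1.0 at the end
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path
```

Decoding each vector in the returned path yields a sequence of images that morphs from the first input to the second.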

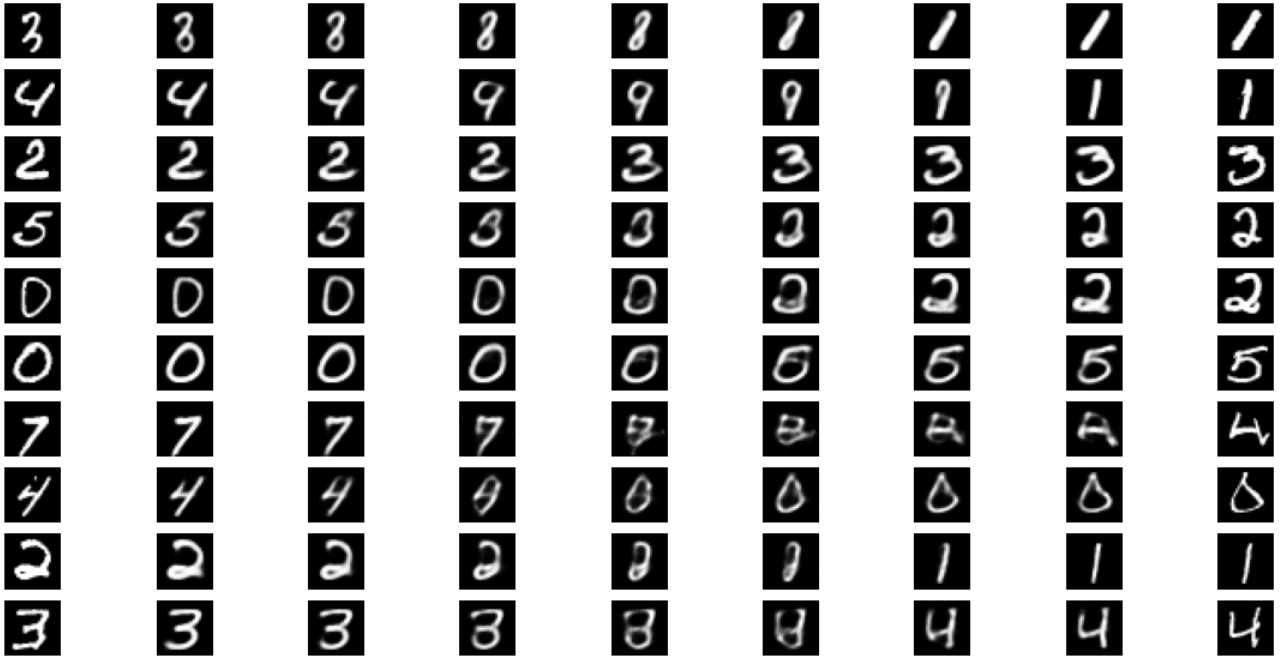

Same Digits:

Different Digits:

Original vs. Reconstructed:

As you can see, the VAE did a fairly good job of generating images.

Loss over 400 Epochs:

I experimented with progressively reducing the learning rate as the parameters changed (or didn’t). Funnily enough, this ended up resembling what an ExponentialLR-type scheduler does. See here. The model was trained for 400 epochs. I likely won’t spin the DAE/VAE back up for videos as I did for the GAN and VAE-GAN.
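An exponential schedule multiplies the learning rate by a fixed factor every epoch. The sketch below reproduces that rule in plain Python. The starting rate and decay factor in the example are assumed values for illustration, not the ones used in this project.

```python
def exponential_lr(initial_lr, gamma, epoch):
    """Learning rate after `epoch` decay steps: initial_lr * gamma ** epoch."""
    return initial_lr * gamma ** epoch

# Example schedule with assumed values (initial_lr=0.001, gamma=0.99).
schedule = [exponential_lr(0.001, 0.99, epoch) for epoch in range(400)]
```

With a decay factor just below 1, the rate shrinks smoothly over the run instead of dropping in steps.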

Generative Adversarial Network

I decided to go a bit further and try to get a Generative Adversarial Network running to generate new images of numbers. This went beyond the standard requirements of the course, but not too far, as I didn’t want to “waste” too much time given that there are newer technologies nowadays. Here’s the repo. The final result is below, along with a video that shows how the model evolved during training:


Losses over 540 Epochs:

VAE-GAN

I finally went a bit further (probably too far) and decided to implement a VAE-GAN. There was a lot more balancing involved between the autoencoder portion and the generative portion. I was able to achieve a passable result, but it was definitely not worth the time and effort spent balancing parameters. The output was strangely smoothed out, yet blurred where it mattered to generate the images.

Final result image and video below:


Here are the associated losses over 1000 epochs (note my discriminator freaking out…):

Final Thoughts

Exploring these advanced generative models not only enhanced my understanding of the deep theoretical underpinnings of machine learning but also provided a practical toolkit for addressing complex real-world data generation and enhancement challenges. The knowledge and experience gained through this project are invaluable and have opened up numerous possibilities for further research and application in the field of artificial intelligence. I anticipate broadening my skillset in Generative AI here soon and will continue to skill up. I also got to experience the sheer tedium of “untangling” a neural network wherein something went wrong in my layers… repeatedly.