To begin with, we are using an Espressif ESP32-WROOM-32 board purchased from Micro Center for $10. These boards can be found for as little as $4-$5 on Amazon, and more capable boards are available in the $10-$20 range. This makes the ESP32 a cost-effective alternative to the Raspberry Pi, especially when the task is primarily logging sensor data. The ESP32 also significantly outperforms the Raspberry Pi Pico: it is approximately five times faster at integer arithmetic and 60-70 times faster at floating-point calculations, as demonstrated in this YouTube video: https://www.youtube.com/watch?v=zGog29YNLmk&ab_channel=Tomsvideos

The code for this project is written in MicroPython. Although rewriting it in C/C++ could potentially yield a 10x performance improvement, the time and effort required to handle compilation issues are not justifiable for this use case. If this were a production environment, opting for C/C++ would be an obvious choice.

What is an Espressif ESP32-WROOM-32 Board?

The ESP32-WROOM-32 is a powerful, low-cost Wi-Fi and Bluetooth microcontroller module developed by Espressif Systems. It is designed for a wide range of applications, from simple IoT projects to complex systems requiring wireless connectivity and advanced processing capabilities. Here are some key features and specifications of the ESP32-WROOM-32 board:

Dual-Core Processor: The ESP32-WROOM-32 features a dual-core Tensilica Xtensa LX6 microprocessor, with clock speeds up to 240 MHz. This provides ample processing power for a variety of tasks, including real-time data processing and multitasking.

Wireless Connectivity:

Wi-Fi: The board supports 802.11 b/g/n Wi-Fi, making it ideal for IoT applications that require internet connectivity. It can operate in both Station and Access Point modes, allowing it to connect to existing networks or create its own.

Bluetooth: It includes Bluetooth 4.2 (BLE and Classic), enabling communication with other Bluetooth devices, such as sensors, smartphones, and peripherals.

Memory:

Flash Memory: The module typically comes with 4 MB of flash memory, used for storing the firmware and other data.

SRAM: It has 520 KB of on-chip SRAM, providing sufficient space for running programs and handling data.

GPIO and Peripherals:

The board features numerous General Purpose Input/Output (GPIO) pins, which can be used for interfacing with various sensors, actuators, and other peripherals.

It includes a variety of built-in peripherals, such as UART, SPI, I2C, PWM, ADC, and DAC, making it highly versatile for different types of projects.

Power Management:

- The ESP32-WROOM-32 is designed with power efficiency in mind, offering multiple power-saving modes, such as deep sleep and light sleep. This makes it suitable for battery-powered applications where low power consumption is crucial.

Development Environment:

- The board is compatible with popular development environments like Arduino IDE, PlatformIO, and Espressif’s own ESP-IDF (Espressif IoT Development Framework). This flexibility allows developers to choose their preferred tools and programming languages.

The ESP32-WROOM-32 board is widely used in various applications, including Internet of Things (IoT), industrial automation, home automation, robotics, health monitoring, and educational projects. It enables the creation of smart home devices, environmental monitoring systems, wearable health trackers, and remote industrial monitoring solutions. Additionally, it is suitable for controlling autonomous robots and drones, developing smart appliances, and building voice assistants. Its versatility makes it an excellent choice for learning and prototyping in STEM education, providing a hands-on experience with microcontrollers, IoT, and embedded systems.

However, the ESP32-WROOM-32 has some limitations that need to be considered. Its processing power and memory are limited compared to more powerful systems, which can be a constraint for complex applications. While it offers various power-saving modes, its power consumption is higher than simpler microcontrollers, making it less ideal for ultra-low-power applications. The board’s real-time performance may not meet the needs of highly time-sensitive tasks, and its limited GPIO pins might require additional hardware for larger projects. Additionally, it may not be suitable for extreme environmental conditions without protective measures, and its complexity can present a steep learning curve for beginners.

The datasheet for the ESP32 can be found here.

CPU and Internal Memory

ESP32-D0WDQ6 contains two low-power Xtensa® 32-bit LX6 microprocessors. The internal memory includes:

448 KB of ROM for booting and core functions.

520 KB of on-chip SRAM for data and instructions.

8 KB of SRAM in RTC, which is called RTC FAST Memory and can be used for data storage; it is accessed by the main CPU during RTC Boot from the Deep-sleep mode.

8 KB of SRAM in RTC, which is called RTC SLOW Memory and can be accessed by the co-processor during the Deep-sleep mode.

1 Kbit of eFuse: 256 bits are used for the system (MAC address and chip configuration) and the remaining 768 bits are reserved for customer applications, including flash-encryption and chip-ID.

Get the esptool via pip:

See usage:

Next find your port after plugging in your ESP32 device via USB:

On Windows, at least, it’s Device Manager -> Ports (COM & LPT); look for a device named USB-Serial CH340, Silicon Labs CP210x USB to UART Bridge, or similar. I had two devices in my ports, so I noted the ports in use, unplugged the board, then plugged it back in to find the new port. This of course didn’t work and I had to add the COM port manually…

Adding COM ports manually.

Open Device Manager on your computer.

Click on the Action option from menu bar.

Choose Add legacy hardware from the menu to open the Add Hardware window.

Click on the Next button to move on.

Check Install the hardware that I manually select from a list (Advanced) and press Next.

Select Ports (COM & LPT) from the given list and press the Next button.

Choose Standard port types option or the manufacturer for the ports; then, click Next.

Click on the Finish button to complete.

You’ll note a new COM port, in my case COM4 and that’s what you’ll need for the next step.

Follow this guide if you’re not seeing things or some other nonsense: https://docs.espressif.com/projects/esp-idf/en/stable/esp32/get-started/establish-serial-connection.html

If you’re experiencing driver issues, this resource might help:

https://www.silabs.com/developers/usb-to-uart-bridge-vcp-drivers?tab=overview

I used the “with serial enumeration” file, and it worked well for me. The device was recognized and assigned to COM4, which I then used for my setup.

After installing PuTTY, everything worked smoothly. Using both the drivers and PuTTY resolved my issues, reminding me to be more patient and consult the documentation before rushing. If you’ve already flashed something, follow the steps to reset while monitoring COM# on PuTTY. You should see the download mode activate. Reset the device by holding the Boot button and pressing the reset button, then holding Boot while flashing.

Getting Started with MicroPython

For the most part refer to the instructions at: https://docs.micropython.org/en/latest/esp32/tutorial/intro.html

Download firmware for your ESP32 board:

https://micropython.org/download/#esp32

Specifically the Microcenter Inland WROOM Board:

https://micropython.org/download/ESP32_GENERIC/

Ensure your device is erased with:

Take care to replace the ‘COM4’ with your port.

Next flash MicroPython to the board:

Make sure you replace the .bin file with the file you downloaded and ensure you’re in the correct directory.

Next, I got up and running with Thonny (https://thonny.org/). It’s a very lightweight Python IDE that’s ESP32 friendly. Make sure you select your device in the lower right corner and you’ll be up and running.

You can run a simple print("Hello World") in the shell to ensure you’re communicating with the device.

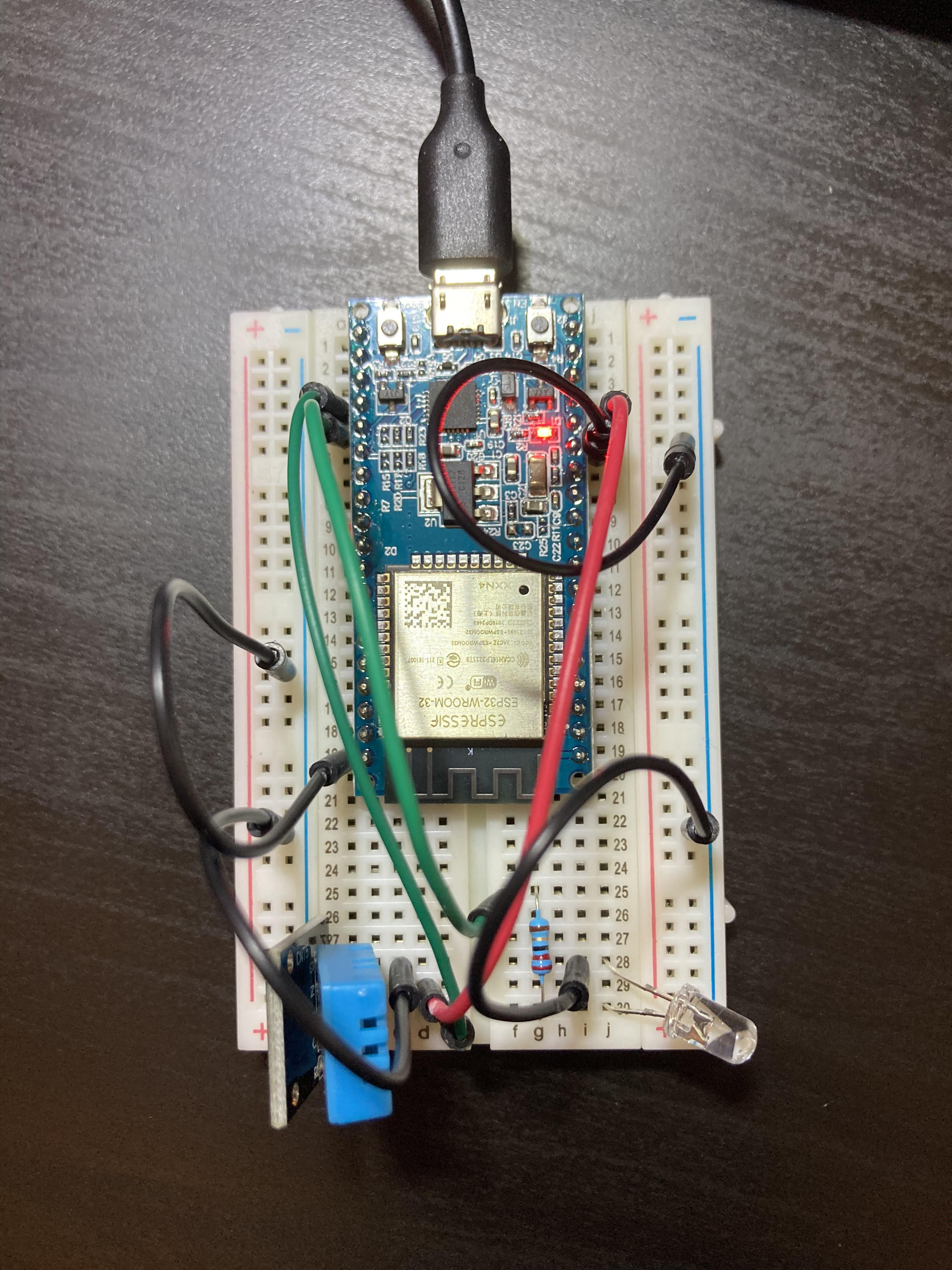

Here’s a schematic of my board setup, with swappable GPIO pins and an optional LED. I included an LED to provide a quick visual indicator that my board is running the code correctly during stress tests.

And the Real Life Version:

Pinouts courtesy of https://github.com/natedogg2020/Inland_ESP32-Supplements

Top:

Bottom:

All pin references are looking from the top and will be either referenced Top Left or Top Right.

DHT11

Ground to Ground Line to Ground at Pin 19(Top Left)

GPIO15 Power from Pin 4(Top Left)

GPIO13 Signal from Pin 5(Top Left)

GPIO Power from Pin 5(Top Right)(I know I should’ve kept it split, but I had to do some nonsense with the power after the wifi cut out due to the power demand)

LED

Resistor: 22ohm(Also used 10, but 22 works better to not blind me)

LED Cathode to Ground to Ground Line to Ground at Pin 6(Top Right)

LED Anode to Resistor(22 ohm) to GPIO2 Power from Pin 5(Again, I know I should’ve kept it separate. I’m not an EE.)

Next, you’ll want to test that your device can connect to the internet, read the sensor, and blink the LED. For now, we’ll skip the Google Apps Script/Vercel PostgreSQL/MongoDB/ThingSpeak integration, so expect errors when the script tries to transmit data.

I’ll provide the code below with explanations of the different parts. To save memory, especially with the Google Apps Script request, I used a lower-level form of POST than standard. This approach is necessary because the Google Response was overloading the memory. Adjust the timeouts according to your needs. Initially, disable the sensors and LED to ensure sufficient power for the wireless connection, then enable the sensors and allow a few seconds for them to register readings.

Code

import network
import urequests as requests
import time as time_module
from machine import Pin
import dht
import ntptime
import utime
import ujson as json
import gc
from machine import freq

# Wi-Fi credentials
SSID = 'YOUR-WIFI-NAME'
PASSWORD = 'YOUR-WIFI-PASSWORD'

# Google Sheets settings
SPREADSHEET_ID = 'YOUR-SPREADSHEET-ID'
RANGE_NAME = 'Sheet1!A1:E1' #NOTE THAT THIS IS FOR DATE TIME HUMIDITY TEMP_F TEMP_C. WILL CHANGE COLUMN DESIGNATION IF YOU ADD/REMOVE DATA.
SHEET_NAME = 'Sheet1'
GOOGLE_URL = 'YOUR-GOOGLE-APP-SCRIPT'

# ThingSpeak settings
THINGSPEAK_API_KEY = 'THINGSPEAK-API-KEY'
THINGSPEAK_URL = 'https://api.thingspeak.com/update'
THINGSPEAK_CHANNEL_ID = 0000000 # Replace with your ThingSpeak channel ID
THINGSPEAK_BULK_UPDATE_URL = 'https://api.thingspeak.com/channels/'+str(THINGSPEAK_CHANNEL_ID)+'/bulk_update.json'

# MongoDB settings
MONGODB_API_URL = 'YOUR_MONGO_DB_URL'
#MONGODB_API_KEY = 'your_mongodb_api_key' #WE ARE NOT USING PYMONGO, DO NOT NEED.
MONGODB__VERCEL_API_URL = 'YOUR-VERCEL-URL/api/sensorMongoDB'

# Vercel settings
VERCEL_API_URL = 'YOUR-VERCEL-URL/api/sensor'
VERCEL_API_KEY = 'YOUR-VERCEL-API-KEY'

# DHT11 sensor setup
SENSOR_POWER_PIN = 13 # Change this to the pin connected to the power control of the sensor
SENSOR_DATA_PIN = 15 # Change this to the pin connected to the data pin of the sensor
LED_PIN = 2 # GPIO pin for the LED

# Initialize the sensor power pin and LED pin
sensor_power_pin = Pin(SENSOR_POWER_PIN, Pin.OUT)
sensor_data_pin = Pin(SENSOR_DATA_PIN)
led = Pin(LED_PIN, Pin.OUT)

# Buffers to store data
data_buffer_vercel = []
data_buffer_mongodb = []
thingspeak_buffer = [] # Buffer for ThingSpeak data

# Control flags
SEND_TO_VERCEL = True
SEND_TO_GOOGLE_SHEETS = True
SEND_TO_THINGSPEAK = True
SEND_TO_MONGODB = True

def connect_wifi(ssid, password):
    wlan = network.WLAN(network.STA_IF)
    wlan.active(True)
    wlan.connect(ssid, password)
    while not wlan.isconnected():
        time_module.sleep(1)
        print("Connecting to WiFi...")
    print("Connected to WiFi")
    print(wlan.ifconfig())

# Function to disable sensors
def disable_sensors():
    sensor_power_pin.value(0) # Turn off sensor by setting the power pin low

# Function to enable sensors
def enable_sensors():
    sensor_power_pin.value(1) # Turn on sensor by setting the power pin high
    time_module.sleep(2) # Wait for the sensor to stabilize

# Function to get system status
def get_system_status(firstRun):
    free_heap = gc.mem_free()
    total_heap = gc.mem_alloc() + free_heap
    free_heap_percent = (free_heap / total_heap) * 100
    if firstRun == True:
        print(f"Total heap memory: {total_heap} bytes")
        # Additional information about the system
        print(f"Frequency: {freq()} Hz")
    print(f"Free heap memory: {free_heap} bytes ({free_heap_percent:.2f}%)")

def get_time_chicago():
    max_retries = 100
    for attempt in range(max_retries):
        try:
            ntptime.settime()
            current_time = utime.localtime()
            break
        except OSError as e:
            print(f"Failed to get NTP time, attempt {attempt + 1} of {max_retries}. Error: {e}")
            time_module.sleep(1)
    else:
        print("Could not get NTP time, proceeding without time synchronization.")
        return utime.localtime()

    # Determine if it is daylight saving time (DST)
    month = current_time[1]
    day = current_time[2]
    hour = current_time[3]
    if (month > 3 and month < 11) or (month == 3 and day >= 8 and hour >= 2) or (month == 11 and day < 1 and hour < 2):
        is_dst = True
    else:
        is_dst = False
    offset = -6 * 3600 if not is_dst else -5 * 3600
    local_time = utime.mktime(current_time) + offset
    return utime.localtime(local_time)

def read_sensor():
    try:
        led.off()
        sensor = dht.DHT11(sensor_data_pin)
        time_module.sleep(1) #the DHT11 sensor takes 1 second
        sensor.measure()
        led.on()
        temp = sensor.temperature()
        hum = sensor.humidity()
        return temp, hum
    except OSError as e:
        print("Failed to read sensor. Exception: ", e)
        return None, None

# Read the access token from the file uploaded earlier
def read_access_token():
    with open('access_token.txt', 'r') as token_file:
        return token_file.read().strip()

# ACCESS_TOKEN = read_access_token()
# print(ACCESS_TOKEN)

import usocket as socket
import ssl

def send_data_to_google_sheets(temp_c, temp_f, humidity, time_str, date_str):
    # print(time_module.time())
    url = GOOGLE_URL # Define your Google URL here
    headers = {
        'Content-Type': 'application/x-www-form-urlencoded'
    }
    data = {
        'Date': date_str,
        'Time': time_str,
        'Humidity %': humidity,
        'Temp F': temp_f,
        'Temp C': temp_c
    }
    # print(time_module.time())
    # Construct the URL-encoded string manually
    encoded_data = (
        "Date=" + date_str +
        "&Time=" + time_str +
        "&Humidity %=" + str(humidity) +
        "&Temp F=" + str(temp_f) +
        "&Temp C=" + str(temp_c)
    )
    try:
        # Extract host and path from URL
        _, _, host, path = url.split('/', 3)
        # Set up a socket connection
        addr = socket.getaddrinfo(host, 443)[0][-1]
        s = socket.socket()
        s.connect(addr)
        s = ssl.wrap_socket(s)
        # Create the HTTP request manually
        request = f"POST /{path} HTTP/1.1\r\nHost: {host}\r\n"
        request += "Content-Type: application/x-www-form-urlencoded\r\n"
        request += f"Content-Length: {len(encoded_data)}\r\n\r\n"
        request += encoded_data
        # Send the request
        s.write(request)
        # # Read the response
        # response = s.read(1024) # Read up to 1024 bytes from the response, THIS TAKES A WHILE, SET TO WHATEVER. 128-1024
        # print('Data sent to Google Sheets:', response)
        # Close the socket
        s.close()
        print('Data sent to Google Sheets!')
        # print(time_module.time())
    except Exception as e:
        print('Failed to send data to Google Sheets:', e)

# # Function to send data to Google Sheets
# def send_data_to_google_sheets(temp_c, temp_f, humidity,time_str,date_str):
#     print(time_module.time())
#     url = GOOGLE_URL
#     headers = {
#         'Content-Type': 'application/x-www-form-urlencoded'
#     }
#     # Construct the URL-encoded string manually
#     print(time_module.time())
#     encoded_data = (
#         "Date=" + date_str +
#         "&Time=" + time_str +
#         "&Humidity %=" + str(humidity) +
#         "&Temp F=" + str(temp_f) +
#         "&Temp C=" + str(temp_c)
#     )
#     print(encoded_data)
#     print(time_module.time())
#     print("request")
#     try:
#         # Get initial free memory
#         # Run garbage collection to get a clean slate
#         gc.collect()
#         initial_free = gc.mem_free()
#         response = requests.post(url, data=encoded_data, headers=headers) #DRAGS... Also
#         # Run garbage collection again
#         gc.collect()
#         # Get final free memory
#         final_free = gc.mem_free()
#
#         # Calculate memory used by the variable
#         memory_used = initial_free - final_free
#         print('Data sent to Google Sheets:')
#         print(time_module.time())
#         print('Size of response: ', memory_used, 'bytes') # Size of response: 46352 bytes. Crazy.... That's like ~4635 date strings.
#     except Exception as e:
#         print('Failed to send data to Google Sheets:', e)

def send_data_to_thingspeak():
    """Send data to ThingSpeak."""
    if SEND_TO_THINGSPEAK and thingspeak_buffer:
        if len(thingspeak_buffer) > 1:
            # Bulk update
            payload = {
                'write_api_key': THINGSPEAK_API_KEY,
                'updates': []
            }
            for data in thingspeak_buffer:
                update = {
                    'created_at': f"{data['date']} {data['time']} -0500",
                    'field1': data['temperature_C'],
                    'field2': data['temperature_F'],
                    'field3': data['humidityPercent'],
                    'field4': data['time'],
                    'field5': data['date']
                }
                payload['updates'].append(update)
            try:
                # Send the bulk update request to ThingSpeak
                headers = {'Content-Type': 'application/json'}
                #print(len(thingspeak_buffer))
                #print(headers)
                #print(json.dumps(payload))
                # Convert the data payload to JSON format
                json_data = json.dumps(payload)
                response = requests.post(THINGSPEAK_BULK_UPDATE_URL,headers=headers,data=json_data)
                if response.status_code == 202:
                    print('Data posted to ThingSpeak (bulk update):', response.text)
                    thingspeak_buffer.clear() # Clear the buffer after successful update
                else:
                    print(f'Failed to send data to ThingSpeak (bulk update): {response.status_code}, {response.text}')
            except Exception as e:
                print('Failed to send data to ThingSpeak (bulk update):', e)
        else:
            data = thingspeak_buffer.pop(0) # Get the first item in the buffer
            print(data)
            payload = {
                'api_key': THINGSPEAK_API_KEY,
                'field1': data['temperature_C'],
                'field2': data['temperature_F'],
                'field3': data['humidityPercent'],
                'field4': data['time'],
                'field5': data['date']
            }
            try:
                response = requests.post(THINGSPEAK_URL, json=payload)
                print('Data posted to ThingSpeak', response.text)
                print(payload)
                thingspeak_buffer.clear() # Clear the buffer after successful update
            except Exception as e:
                print('Failed to send data to ThingSpeak:', e)
                thingspeak_buffer.clear()

def send_data_to_mongodb():
    url = MONGODB__VERCEL_API_URL
    headers = {
        'x-api-key': VERCEL_API_KEY,
        'Content-Type': 'application/json'
    }
    try:
        # Convert the data dictionary to a JSON string
        json_data = json.dumps(data_buffer_mongodb)
        # Print the request details for debugging
        # print("Sending data to:", url)
        # print("Headers:", headers)
        # print("Payload:", json_data)
        response = requests.post(url, data=json_data, headers=headers)
        # Print the response details for debugging
        print("Status Code:", response.status_code)
        print("Response Text:", response.text)
        data_buffer_mongodb.clear()
    except Exception as e:
        print("Failed to send data to Vercel MongoDB API:", e)
        data_buffer_mongodb.clear()

def send_data_to_vercel():
    url = VERCEL_API_URL
    headers = {
        'x-api-key': VERCEL_API_KEY,
        'Content-Type': 'application/json'
    }
    try:
        # Convert the data dictionary to a JSON string
        json_data = json.dumps(data_buffer_vercel)
        # Print the request details for debugging
        # print("Sending data to:", url)
        # print("Headers:", headers)
        # print("Payload:", json_data)
        response = requests.post(url, data=json_data, headers=headers)
        # Print the response details for debugging
        print("Status Code:", response.status_code)
        print("Response Text:", response.text)
    except Exception as e:
        print("Failed to send data to Vercel:", e)

def main():
    # main() rebinds data_buffer_vercel below; without this declaration the
    # assignment would create a local and send_data_to_vercel() would post the empty global.
    global data_buffer_vercel
    firstRun = True
    enable_sensors()
    temp,hum=read_sensor()
    print(temp,hum)
    led.off()
    disable_sensors()
    connect_wifi(SSID, PASSWORD)
    enable_sensors()
    last_google_sheets_update = time_module.time()
    last_thingspeak_update = time_module.time()
    last_vercel_update = time_module.time()
    last_mongodb_update = time_module.time()
    iter = 0
    while True:
        try:
            led.on()
            enable_sensors() #enable the sensors via GPIO
            temp_c, humidity = read_sensor() #log readings
            led.off()
            disable_sensors()# disable the sensors so wifi transmission doesn't run into power issues.
            if temp_c is not None and humidity is not None:
                temp_f = temp_c * 9 / 5 + 32
                local_time = get_time_chicago()
                date_str = f"{local_time[0]}-{local_time[1]:02d}-{local_time[2]:02d}"
                time_str = f"{local_time[3]:02d}:{local_time[4]:02d}:{local_time[5]:02d}"
                # Print the current reading (humidity), not the stale startup value (hum)
                print(f'[{iter}]Date: {date_str}, Time: {time_str}, Temperature: {temp_c}°C, Humidity: {humidity}%, Temperature: {temp_f}°F ')
                # print(date_str)
                # print(time_str)
                # Add data to buffers
                data = {
                    'date': date_str,
                    'time': time_str,
                    'humidityPercent': humidity,
                    'temperatureFahrenheit': temp_f,
                    'temperatureCelsius': temp_c
                }
                data_buffer_vercel={
                    'date': date_str,
                    'time': time_str,
                    'humidityPercent': humidity,
                    'temperatureFahrenheit': temp_f,
                    'temperatureCelsius': temp_c
                }
                thingspeak_buffer.append({
                    'temperature_C': temp_c,
                    'temperature_F': temp_f,
                    'humidityPercent': humidity,
                    'time': time_str,
                    'date': date_str
                })
                data_buffer_mongodb.append({
                    'date': date_str,
                    'time': time_str,
                    'humidityPercent': humidity,
                    'temperatureFahrenheit': temp_f,
                    'temperatureCelsius': temp_c, # was temp, the stale startup reading
                })
                print("ThingSpeak Buffer:",len(thingspeak_buffer),"|Vercel Buffer:",len(data_buffer_vercel),"|MongoDB Buffer:",len(data_buffer_mongodb))
                # Check if it's time to send data to Google Sheets
                if time_module.time() - last_google_sheets_update >= 5:
                    send_data_to_google_sheets(temp_c, temp_f, humidity, time_str, date_str)
                    last_google_sheets_update = time_module.time()
                # Check if it's time to send data to ThingSpeak
                if time_module.time() - last_thingspeak_update >= 15:
                    send_data_to_thingspeak()
                    last_thingspeak_update = time_module.time()
                # # Check if it's time to send data to Vercel
                #Vercel DB no good for this low level stuff. Overflow error and out of memory.
                #No append operation as a result.
                if time_module.time() - last_vercel_update >= 3600:
                    send_data_to_vercel()
                    last_vercel_update = time_module.time()
                # Check if it's time to send data to MongoDB
                if time_module.time() - last_mongodb_update >= 15:
                    send_data_to_mongodb()
                    last_mongodb_update = time_module.time()
            led.on()
            # time_module.sleep(1) # Wait for 1 seconds before logging the next reading. Note sensor sampling times!
            led.off()
            get_system_status(firstRun)
            print(" ")
            firstRun = False
            iter = iter+1
        except Exception as e:
            print(f"Error in main loop: {e}") # usually some one off memory error. It'll reset while still connected to wifi and everyone will be happy.

if __name__ == '__main__':
    main()

And a breakdown:

Code breakdown

Import Necessary Libraries

First, we import the necessary libraries required for the project:

network for managing Wi-Fi connectivity.

urequests for making HTTP requests to various APIs.

time and utime for handling time-related functions.

dht for interacting with the DHT11 sensor.

ntptime for synchronizing time with an NTP server.

ujson for handling JSON data.

gc for garbage collection to manage memory.

machine for controlling hardware components like GPIO pins.

Wi-Fi and API Credentials

We define constants to store Wi-Fi credentials and API details:

SSID and PASSWORD for Wi-Fi network credentials.

SPREADSHEET_ID, RANGE_NAME, SHEET_NAME, and GOOGLE_URL for Google Sheets integration.

THINGSPEAK_API_KEY, THINGSPEAK_URL, THINGSPEAK_CHANNEL_ID, and THINGSPEAK_BULK_UPDATE_URL for ThingSpeak integration.

MONGODB_API_URL and MONGODB__VERCEL_API_URL for MongoDB integration.

VERCEL_API_URL and VERCEL_API_KEY for Vercel integration.

Setting Up GPIO Pins

We configure the GPIO pins on the ESP32:

SENSOR_POWER_PIN to control the power to the DHT11 sensor.

SENSOR_DATA_PIN to read data from the DHT11 sensor.

LED_PIN to control an LED used for indicating status.

Data Buffers and Control Flags

Buffers are initialized to temporarily store data before sending it to the respective services:

data_buffer_vercel, data_buffer_mongodb, and thingspeak_buffer store data for Vercel, MongoDB, and ThingSpeak, respectively.

Control flags (SEND_TO_VERCEL, SEND_TO_GOOGLE_SHEETS, SEND_TO_THINGSPEAK, SEND_TO_MONGODB) determine whether data should be sent to each service.

Connecting to Wi-Fi

The connect_wifi function manages the connection to the Wi-Fi network:

Activates the WLAN interface.

Connects to the specified Wi-Fi network using the provided SSID and password.

Continuously checks the connection status and prints the IP configuration once connected.
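As written, connect_wifi keeps polling forever if the network never comes up. Here is a minimal sketch of a bounded wait, in case you would rather give up and retry later. The names wait_for_wifi, timeout_s, poll_s, and FakeWLAN are hypothetical and not part of the script above. On the board you would pass the network.WLAN(network.STA_IF) object after calling active(True) and connect(ssid, password).

```python
import time


def wait_for_wifi(wlan, timeout_s=20, poll_s=1, sleep=time.sleep):
    """Poll wlan.isconnected() until it is True or timeout_s has elapsed.

    Returns True once connected, False on timeout. `wlan` only needs an
    isconnected() method, so a network.WLAN object should work unchanged.
    """
    waited = 0
    while not wlan.isconnected():
        if waited >= timeout_s:
            return False
        sleep(poll_s)
        waited += poll_s
    return True


class FakeWLAN:
    """Hypothetical stand-in for network.WLAN, used only for this demo."""

    def __init__(self, connect_after_polls):
        self._polls_left = connect_after_polls

    def isconnected(self):
        # Report "connected" only after the configured number of polls.
        self._polls_left -= 1
        return self._polls_left < 0


if __name__ == "__main__":
    no_sleep = lambda seconds: None  # skip real delays in the demo
    print(wait_for_wifi(FakeWLAN(3), sleep=no_sleep))
    print(wait_for_wifi(FakeWLAN(100), timeout_s=5, sleep=no_sleep))
```

Returning False instead of blocking lets the caller decide what to do next, for example powering the sensor back down and trying again on the next pass.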

Sensor Control Functions

Two functions manage the power state of the DHT11 sensor:

disable_sensors sets the power pin low to turn off the sensor.

enable_sensors sets the power pin high and waits for the sensor to stabilize.

System Status Function

The get_system_status function provides insights into the system’s memory usage and CPU frequency:

Calculates the total and free heap memory.

Prints the memory statistics and CPU frequency.

Time Synchronization

The get_time_chicago function synchronizes the ESP32’s clock with an NTP server:

Attempts to set the time using NTP up to a maximum number of retries.

Adjusts the time based on whether daylight saving time (DST) is in effect for the Chicago timezone.
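The DST test in the script is a rough approximation: it works on the UTC hour and does not look for the actual changeover Sundays. Since 2007 the US rule is that DST starts on the second Sunday of March and ends on the first Sunday of November, both at 2:00 local time. Below is a sketch of that rule using only integer arithmetic, so it should not need anything beyond what MicroPython already provides. The function names are hypothetical, and the inputs are assumed to be the UTC fields that utime.localtime() returns after ntptime.settime().

```python
def day_of_week(year, month, day):
    """Return 0 for Sunday through 6 for Saturday (Sakamoto's method)."""
    offsets = [0, 3, 2, 5, 0, 3, 5, 1, 4, 6, 2, 4]
    if month < 3:
        year -= 1
    return (year + year // 4 - year // 100 + year // 400
            + offsets[month - 1] + day) % 7


def nth_sunday(year, month, n):
    """Day of the month of the n-th Sunday (n starts at 1)."""
    first_sunday = 1 + (7 - day_of_week(year, month, 1)) % 7
    return first_sunday + 7 * (n - 1)


def is_chicago_dst(year, month, day, hour):
    """True if the given UTC date and hour fall inside US Central DST.

    DST starts at 2:00 CST (08:00 UTC) on the second Sunday of March
    and ends at 2:00 CDT (07:00 UTC) on the first Sunday of November.
    """
    start = (3, nth_sunday(year, 3, 2), 8)
    end = (11, nth_sunday(year, 11, 1), 7)
    return start <= (month, day, hour) < end


def chicago_offset_seconds(year, month, day, hour):
    """UTC offset for Chicago in seconds: -5 h during DST, else -6 h."""
    return (-5 if is_chicago_dst(year, month, day, hour) else -6) * 3600


if __name__ == "__main__":
    print(is_chicago_dst(2024, 7, 4, 12))
    print(chicago_offset_seconds(2024, 1, 15, 12))
```

The result of chicago_offset_seconds could replace the offset calculation in get_time_chicago, with current_time[0] through current_time[3] as the arguments.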

Reading Sensor Data

The read_sensor function reads temperature and humidity data from the DHT11 sensor:

Measures the temperature and humidity.

Returns the values or None if the reading fails.

Sending Data to Google Sheets

The send_data_to_google_sheets function sends sensor data to Google Sheets:

Constructs the data payload and URL-encodes it.

Sends the data using an HTTP POST request.

Handles errors during the data sending process.
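The script concatenates the form body by hand and leaves the space and percent sign in "Humidity %" unencoded, which worked for me but is not strictly valid form encoding. The sketch below shows one way to percent-encode the fields and assemble the raw request as bytes. percent_encode and build_form_post are hypothetical helper names, and the host and path in the demo are placeholders rather than a real Apps Script URL.

```python
SAFE_CHARS = (
    "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    "abcdefghijklmnopqrstuvwxyz"
    "0123456789-_.*"
)


def percent_encode(value):
    """Encode a value for an application/x-www-form-urlencoded body."""
    out = []
    for byte in str(value).encode("utf-8"):
        char = chr(byte)
        if char in SAFE_CHARS:
            out.append(char)
        elif char == " ":
            out.append("+")  # form encoding writes spaces as '+'
        else:
            out.append("%{:02X}".format(byte))
    return "".join(out)


def build_form_post(host, path, fields):
    """Return a complete HTTP/1.1 POST request as bytes.

    `fields` is a list of (name, value) pairs so the column order is kept.
    """
    body = "&".join(
        percent_encode(name) + "=" + percent_encode(value)
        for name, value in fields
    ).encode("utf-8")
    head = (
        "POST {} HTTP/1.1\r\n"
        "Host: {}\r\n"
        "Content-Type: application/x-www-form-urlencoded\r\n"
        "Content-Length: {}\r\n"
        "Connection: close\r\n\r\n"
    ).format(path, host, len(body))
    return head.encode("utf-8") + body


if __name__ == "__main__":
    request = build_form_post(
        "example.com",             # placeholder host
        "/macros/s/EXAMPLE/exec",  # placeholder path
        [("Date", "2024-06-01"), ("Time", "12:30:00"),
         ("Humidity %", 45), ("Temp F", 77.0), ("Temp C", 25)],
    )
    print(request.decode("utf-8"))
```

Content-Length is computed from the encoded bytes rather than the string, so it stays correct even if a value contains non-ASCII characters.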

Sending Data to ThingSpeak

The send_data_to_thingspeak function sends data to ThingSpeak:

Supports both single data point updates and bulk updates.

Constructs the payload and sends it using an HTTP POST request.

Handles errors and clears the buffer after successful updates.
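The bulk branch hard-codes a -0500 offset in created_at, which only matches Chicago while DST is in effect. A small sketch of building the same payload as a pure function with the offset passed in is shown here. build_bulk_payload and its utc_offset parameter are hypothetical names; the field layout mirrors the script above.

```python
import json


def build_bulk_payload(write_api_key, readings, utc_offset="-0500"):
    """Return the JSON body for a ThingSpeak bulk update.

    `readings` is a list of dicts shaped like the entries in
    thingspeak_buffer; `utc_offset` is appended to each timestamp.
    """
    updates = []
    for reading in readings:
        updates.append({
            "created_at": "{} {} {}".format(
                reading["date"], reading["time"], utc_offset),
            "field1": reading["temperature_C"],
            "field2": reading["temperature_F"],
            "field3": reading["humidityPercent"],
            "field4": reading["time"],
            "field5": reading["date"],
        })
    return json.dumps({"write_api_key": write_api_key, "updates": updates})


if __name__ == "__main__":
    sample = [{
        "temperature_C": 25, "temperature_F": 77.0, "humidityPercent": 45,
        "time": "12:30:00", "date": "2024-01-15",
    }]
    # -0600 because Chicago is on standard time in January.
    print(build_bulk_payload("EXAMPLE-KEY", sample, utc_offset="-0600"))
```

Keeping the payload construction separate from the HTTP call also makes it easy to check on a desktop Python before flashing.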

Sending Data to MongoDB via Vercel API

The send_data_to_mongodb function sends data to a MongoDB instance via a Vercel API:

Converts the data buffer to JSON.

Sends the data using an HTTP POST request.

Handles errors and clears the buffer after successful updates.

Sending Data to Vercel API

The send_data_to_vercel function sends data to a Vercel API endpoint:

Converts the data buffer to JSON.

Sends the data using an HTTP POST request.

Handles errors during the data sending process (no buffer due to memory limitations and Vercel limits).

Main Function

The main function orchestrates the entire process:

Initializes the sensor and connects to Wi-Fi.

Enters an infinite loop where it periodically reads sensor data, stores it in buffers, and sends it to the configured services.

Controls the LED to indicate the status of operations.

Manages the timing of data sending to ensure that each service receives data at the specified intervals.

Logs system status and handles errors in the main loop.

Upon encountering an error, most likely memory related, begins the loop again.
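The main loop tracks a separate last_..._update variable for each service and repeats the same comparison four times. The sketch below shows one way to fold that pattern into a small helper. IntervalTimer is a hypothetical class, not something the script uses, and the demo drives it with a simulated clock instead of time.time().

```python
class IntervalTimer:
    """Reports when at least interval_s seconds have passed since the last hit."""

    def __init__(self, interval_s, now):
        self.interval_s = interval_s
        self.last = now

    def due(self, now):
        """Return True and restart the interval if it has elapsed."""
        if now - self.last >= self.interval_s:
            self.last = now
            return True
        return False


if __name__ == "__main__":
    # Intervals taken from the main loop: 5 s for Google Sheets, 15 s for ThingSpeak.
    timers = {
        "google_sheets": IntervalTimer(5, now=0),
        "thingspeak": IntervalTimer(15, now=0),
    }
    for simulated_second in range(0, 31):
        for name, timer in timers.items():
            if timer.due(simulated_second):
                print(simulated_second, "send to", name)
```

On the board, each `due` call would receive time_module.time() and the print would be replaced by the matching send function.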

Test the code and verify that your circuit is functioning correctly. After that, configure Vercel or another API endpoint and Google Apps Script. Configuration details for Vercel can be found at: https://jesse-anderson.github.io/Blog/_site/posts/Pi-Sensor-Proj-May-2024/, so I’ll skip that part. Create the JavaScript file (sensorMongoDB.js) below and place it in your /api folder. Ensure all changes are pushed to GitHub.

Code

const { MongoClient } = require('mongodb');

const API_KEY = process.env.API_KEY; // Retrieve the API key from environment variables
const MONGO_URI = process.env.MONGODB_URI; // MongoDB connection string from environment variables
const MONGODB_DB_NAME = 'Raspberry_Pi'; // Database name
const MONGODB_COLLECTION_NAME = 'Readings'; // Collection name

let client;

const connectToMongo = async () => {
  if (!client) {
    client = new MongoClient(MONGO_URI, {
      useNewUrlParser: true,
      useUnifiedTopology: true,
    });
    await client.connect();
  }
  return client.db(MONGODB_DB_NAME);
};

const handleSensorData = async (req, res) => {
  if (req.method !== 'POST') {
    return res.status(405).json({ error: 'Method not allowed' });
  }
  try {
    console.log('Request received'); // For debugging purposes
    // Extract API key from request headers
    const providedApiKey = req.headers['x-api-key'];
    console.log('Provided API Key:', providedApiKey); // For debugging purposes
    // Check if API key is provided and matches the expected API key
    if (!providedApiKey || providedApiKey !== API_KEY) {
      return res.status(401).json({ error: 'Unauthorized' });
    }
    // Extract data from request body
    const data = req.body;
    // Log the data received to console for verification
    console.log('Received data:', JSON.stringify(data, null, 2));
    const db = await connectToMongo();
    const collection = db.collection(MONGODB_COLLECTION_NAME);
    let result;
    if (Array.isArray(data)) {
      // Insert multiple readings
      result = await collection.insertMany(data.map(entry => ({
        ...entry,
        temperatureCelsius: entry.temperatureCelsius !== null && entry.temperatureCelsius !== undefined ? entry.temperatureCelsius : 0,
        temperatureFahrenheit: entry.temperatureFahrenheit !== null && entry.temperatureFahrenheit !== undefined ? entry.temperatureFahrenheit : 0,
        humidityPercent: entry.humidityPercent !== null && entry.humidityPercent !== undefined ? entry.humidityPercent : 0,
        date: entry.date !== null && entry.date !== undefined ? entry.date : new Date().toISOString().split('T')[0],
        time: entry.time !== null && entry.time !== undefined ? entry.time : new Date().toISOString().split('T')[1].split('.')[0]
      })));
    } else {
      // Insert a single reading
      result = await collection.insertOne({
        temperatureCelsius: data.temperatureCelsius !== null && data.temperatureCelsius !== undefined ? data.temperatureCelsius : 0,
        temperatureFahrenheit: data.temperatureFahrenheit !== null && data.temperatureFahrenheit !== undefined ? data.temperatureFahrenheit : 0,
        humidityPercent: data.humidityPercent !== null && data.humidityPercent !== undefined ? data.humidityPercent : 0,
        date: data.date !== null && data.date !== undefined ? data.date : new Date().toISOString().split('T')[0],
        time: data.time !== null && data.time !== undefined ? data.time : new Date().toISOString().split('T')[1].split('.')[0]
      });
    }
    console.log('Data stored in MongoDB:', result);
    // Send a successful response back to the client
    res.status(200).json({ message: 'Data received and stored successfully!', data: result });
  } catch (e) {
    // Handle errors and send an error response
    console.error("Error connecting to MongoDB or inserting data:", e);
    res.status(500).json({ error: 'Failed to connect to database or insert data', details: e.message });
  }
};

// Export the function for Vercel
module.exports = handleSensorData;

I’ll omit breaking down the code as it is similar enough to the code described in the earlier post.

Setting up Google Apps Script

To set up Google Apps Script, first create a new Google Sheet named ‘ESP32’ or a name of your choice. Populate the first row with “Date | Time | Humidity % | Temp F | Temp C.” Then, go to Extensions -> Apps Script and create a new script. Enter the code below and run the setup function by selecting it and clicking the play button.

Code

// 1. Enter sheet name where data is to be written below

var SHEET_NAME = "Sheet1";

var SCRIPT_PROP = PropertiesService.getScriptProperties(); // new property service

function doGet(e) {

return handleResponse(e);

}

function doPost(e) {

return handleResponse(e);

}

function handleResponse(e) {

Logger.log("Request received: " + JSON.stringify(e.parameter));

var lock = LockService.getPublicLock();

lock.waitLock(500); // wait up to 0.5 seconds for the lock before giving up

// The lock prevents a race condition from concurrent writes to the same spreadsheet. With a single Python client posting to one spreadsheet this is essentially a non-issue. Use a longer timeout (for example, 30 seconds) for more complicated setups.

try {

var docId = SCRIPT_PROP.getProperty("key");

Logger.log("Using document ID: " + docId);

var doc = SpreadsheetApp.openById(docId);

var sheet = doc.getSheetByName(SHEET_NAME);

var headRow = e.parameter.header_row || 1;

var headers = sheet.getRange(1, 1, 1, sheet.getLastColumn()).getValues()[0];

var nextRow = sheet.getLastRow() + 1; // get next row

var row = [];

for (var i in headers) {

if (headers[i] == "Timestamp") {

row.push(new Date());

} else {

row.push(e.parameter[headers[i]]);

}

}

sheet.getRange(nextRow, 1, 1, row.length).setValues([row]);

var output = JSON.stringify({"result": "success", "row": nextRow});

Logger.log("Data written to row: " + nextRow);

if (e.parameter.callback) {

return ContentService.createTextOutput(e.parameter.callback + "(" + output + ");")

.setMimeType(ContentService.MimeType.JAVASCRIPT);

} else {

return ContentService.createTextOutput(output)

.setMimeType(ContentService.MimeType.JSON);

}

} catch (error) {

Logger.log("Error: " + error.toString());

var output = JSON.stringify({"result": "error", "error": error.toString()});

if (e.parameter.callback) {

return ContentService.createTextOutput(e.parameter.callback + "(" + output + ");")

.setMimeType(ContentService.MimeType.JAVASCRIPT);

} else {

return ContentService.createTextOutput(output)

.setMimeType(ContentService.MimeType.JSON);

}

} finally {

lock.releaseLock();

}

}

function setup() {

var doc = SpreadsheetApp.getActiveSpreadsheet();

SCRIPT_PROP.setProperty("key", doc.getId());

Logger.log("Script property 'key' set to: " + doc.getId());

}

Code Summary

1. Setting Up Sheet Name and Script Properties

First, the script defines the name of the sheet where data will be written. It also initializes a property service to store script properties, which can be useful for managing script configurations and data across different executions.

2. Handling HTTP Requests

The script includes functions to handle GET and POST requests. These functions delegate the request handling to a common function called handleResponse, which simplifies the code by centralizing the request processing logic.

3. Processing Incoming Requests

The core of the script is the handleResponse function, which processes incoming HTTP requests. This function:

Logs the Request: It logs the received parameters for debugging purposes, providing visibility into the incoming data.

Locks the Sheet: Uses a public lock to prevent race conditions, ensuring that only one instance of the script writes to the sheet at a time. This is crucial for avoiding data corruption when multiple requests are processed simultaneously.

Accesses the Spreadsheet: Retrieves the Google Sheet document ID from script properties, opens the sheet, and selects the specified sheet by name.

Prepares the Data Row: Reads the headers from the first row of the sheet and constructs a new row of data based on the incoming request parameters. If a header is “Timestamp”, it adds the current date and time.

Writes Data to the Sheet: Writes the constructed row to the next available row in the sheet and logs the row number where data was written.

Generates the Response: Constructs a JSON response indicating success and the row number. If a JSONP callback is specified, it formats the response accordingly.

Handles Errors: Catches any errors that occur during the process, logs the error, and returns a JSON response indicating failure.

Releases the Lock: Ensures that the lock is released at the end of the function, whether it completes successfully or encounters an error.
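The row-building and response steps above can be sketched as plain JavaScript that runs outside Apps Script. `buildRow` and `formatOutput` are hypothetical names introduced here for illustration, and the sample headers and parameters are made up. The real script reads them from the sheet and from `e.parameter`.

```javascript
// Hypothetical helper: build one row in the same column order as the headers.
function buildRow(headers, params, now = new Date()) {
  return headers.map(function (header) {
    // A "Timestamp" column is filled in by the script itself;
    // every other column is looked up by header name in the request parameters.
    return header === 'Timestamp' ? now : params[header];
  });
}

// Hypothetical helper: plain JSON, or JSONP when a callback name is supplied.
function formatOutput(payload, callback) {
  const json = JSON.stringify(payload);
  return callback ? callback + '(' + json + ');' : json;
}

// Made-up sample request; note that "Temp C" is deliberately missing.
const sampleHeaders = ['Date', 'Time', 'Humidity %', 'Temp F', 'Temp C'];
const sampleParams = {
  'Date': '2024-07-01',
  'Time': '12:34:56',
  'Humidity %': '45.2',
  'Temp F': '72.5',
};

console.log(buildRow(sampleHeaders, sampleParams));
console.log(formatOutput({ result: 'success', row: 2 }));
console.log(formatOutput({ result: 'success', row: 2 }, 'handleData'));
```

In this sketch a header with no matching parameter yields `undefined`, so the parameter names sent by the client need to match the header row exactly.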

4. Setting Up the Script

A setup function is included to configure the script properties. It retrieves the active spreadsheet’s ID and stores it in the script properties, allowing the handleResponse function to access the correct document.

After running setup, you will see a key in the Execution log:

Check that this key matches your Google Sheets spreadsheet ID:

Next, click Deploy in the top right corner of the screen, select New deployment, fill in the description, set “Execute as” to “Me,” and set “Who has access” to “Anyone.” Deploy the project, and you will receive a Deployment ID and a Web App URL. Copy the Web App URL, which you will use for sending data.

The web app URL should be of the following form:
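As a rough illustration, a web app URL typically looks like `https://script.google.com/macros/s/<DEPLOYMENT_ID>/exec`. The sketch below builds a request URL whose query parameters match the sheet's header row. `DEPLOYMENT_ID` is a placeholder, `buildRequestUrl` is a hypothetical helper, and the sample readings are made up.

```javascript
// Placeholder: replace with the Deployment ID shown after deploying.
const DEPLOYMENT_ID = 'YOUR_DEPLOYMENT_ID';

// Hypothetical helper: append one query parameter per sheet header.
function buildRequestUrl(deploymentId, reading) {
  const url = new URL('https://script.google.com/macros/s/' + deploymentId + '/exec');
  for (const [header, value] of Object.entries(reading)) {
    // searchParams.set percent-encodes spaces and '%' in names like "Humidity %"
    url.searchParams.set(header, String(value));
  }
  return url.toString();
}

// Made-up sample reading keyed by the header names from the first row.
const requestUrl = buildRequestUrl(DEPLOYMENT_ID, {
  'Date': '2024-07-01',
  'Time': '12:34:56',
  'Humidity %': 45.2,
  'Temp F': 72.5,
  'Temp C': 22.5,
});
console.log(requestUrl);
```

Encoding matters here because two of the header names contain spaces and one contains a percent sign, and the script looks parameters up by the exact header text.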

Running the Program with Data Logging

At this point, everything should be set up and ready for data logging. Run the program, and it should connect to Wi-Fi, enable the sensors, and start posting to your databases. Be aware that large buffer sizes can cause errors, so I kept them small. You can increase the buffer size up to the point where you begin to get memory errors (the program will throw an exception and retry the data upload loop). Using an SD card for local logging is an option, but I haven’t tried it yet. It could involve logging locally, then using a local web server to push data as needed.

Flashing the Program

Next, put the ESP32 into download mode by holding the boot button and pressing reset, then save the Thonny file to the ESP32 as main.py. After this, test that your program is running by unplugging the ESP32, plugging it back in, and either listening to the COM4 port in PuTTY or observing the LED. Once you’ve verified this, go back into Thonny and select File -> Open -> MicroPython device -> boot.py. Add import main as a new line, and the program should start every time the board is connected to power. Note that this is a nuclear option; generally, main.py should already run on power-up. From here you should be able to move the board wherever you want within your home, or even hand solder the connection points onto something like this:

https://www.amazon.com/Adafruit-Accessories-Perma-Proto-Full-Breadboard/dp/B00SK8KAMM

Use good wire and solder!

A general diagram of the flow for the ESP32 is below:

We will make a few changes to the Google Apps Script so we can access the Sheet concurrently with the data logging:

Code

// 1. Enter sheet name where data is to be written below

var SHEET_NAME = "Sheet1";

var SCRIPT_PROP = PropertiesService.getScriptProperties(); // new property service

function doGet(e) {

Logger.log("doGet called with parameters: " + JSON.stringify(e.parameter));

if (e.parameter.action && e.parameter.action === 'getLastRow') {

Logger.log("Calling getLastRowData function");

return getLastRowData();

} else if (e.parameter.action && e.parameter.action === 'getLast6000Rows') {

Logger.log("Calling getLast6000Rows function");

return getLast6000Rows();

} else {

Logger.log("Invalid action or no action specified");

return ContentService.createTextOutput(JSON.stringify({ error: "Invalid action or no action specified" })).setMimeType(ContentService.MimeType.JSON);

}

}

function doPost(e) {

Logger.log("doPost called with parameters: " + JSON.stringify(e.parameter));

return handleResponse(e);

}

function handleResponse(e) {

var action = e.parameter.action;

Logger.log("handleResponse called with action: " + action);

if (action === 'getLastRow') {

Logger.log("Calling getLastRowData function");

return getLastRowData();

} else if (action === 'getLast6000Rows') {

Logger.log("Calling getLast6000Rows function");

return getLast6000Rows();

} else {

try {

return handleDataSubmission(e);

} catch (error) {

Logger.log("Error in handleDataSubmission: " + error.message);

return ContentService.createTextOutput(JSON.stringify({ error: error.message })).setMimeType(ContentService.MimeType.JSON);

}

}

}

function handleDataSubmission(e) {

Logger.log("handleDataSubmission called with parameters: " + JSON.stringify(e.parameter));

var lock = LockService.getPublicLock();

lock.waitLock(500); // wait up to 0.5 seconds for the lock before giving up

// The lock prevents a race condition from concurrent writes to the same spreadsheet. With a single Python client posting to one spreadsheet this is essentially a non-issue. Use a longer timeout (for example, 30 seconds) for more complicated setups.

try {

var docId = SCRIPT_PROP.getProperty("key");

Logger.log("Using document ID: " + docId);

var doc = SpreadsheetApp.openById(docId);

var sheet = doc.getSheetByName(SHEET_NAME);

var headRow = e.parameter.header_row || 1;

var headers = sheet.getRange(1, 1, 1, sheet.getLastColumn()).getValues()[0];

var nextRow = sheet.getLastRow() + 1; // get next row

var row = [];

for (var i in headers) {

if (headers[i] == "Timestamp") {

row.push(new Date());

} else {

row.push(e.parameter[headers[i]]);

}

}

sheet.getRange(nextRow, 1, 1, row.length).setValues([row]);

var output = JSON.stringify({"result": "success", "row": nextRow});

Logger.log("Data written to row: " + nextRow);

if (e.parameter.callback) {

return ContentService.createTextOutput(e.parameter.callback + "(" + output + ");")

.setMimeType(ContentService.MimeType.JAVASCRIPT);

} else {

return ContentService.createTextOutput(output)

.setMimeType(ContentService.MimeType.JSON);

}

} catch (error) {

Logger.log("Error: " + error.toString());

var output = JSON.stringify({"result": "error", "error": error.toString()});

if (e.parameter.callback) {

return ContentService.createTextOutput(e.parameter.callback + "(" + output + ");")

.setMimeType(ContentService.MimeType.JAVASCRIPT);

} else {

return ContentService.createTextOutput(output)

.setMimeType(ContentService.MimeType.JSON);

}

} finally {

lock.releaseLock();

}

}

function setup() {

var doc = SpreadsheetApp.getActiveSpreadsheet();

SCRIPT_PROP.setProperty("key", doc.getId());

Logger.log("Script property 'key' set to: " + doc.getId());

}

function getLastRowData() {

try {

Logger.log("getLastRowData function called");

// Open the spreadsheet by ID and get the specified sheet

var sheet = SpreadsheetApp.openById(SCRIPT_PROP.getProperty("key")).getSheetByName(SHEET_NAME);

Logger.log('Sheet accessed successfully');

// Get the last row number

var lastRow = sheet.getLastRow();

Logger.log('Last row number: ' + lastRow);

// Get the values of the last row

var lastRowData = sheet.getRange(lastRow, 1, 1, sheet.getLastColumn()).getValues()[0];

Logger.log('Last row data: ' + JSON.stringify(lastRowData));

// Get the headers from the first row

var headers = sheet.getRange(1, 1, 1, sheet.getLastColumn()).getValues()[0];

Logger.log('Headers: ' + JSON.stringify(headers));

// Create an object to store the last row data

var result = {};

// Populate the result object with the headers as keys and last row data as values

headers.forEach(function(header, index) {

if (header === 'Date') {

result[header] = formatDate(lastRowData[index], "America/Chicago");

} else if (header === 'Time') {

result[header] = formatTime(lastRowData[index], "America/Chicago");

} else {

result[header] = lastRowData[index];

}

});

Logger.log('Result object: ' + JSON.stringify(result));

// Convert the result object to JSON

var json = JSON.stringify(result);

Logger.log('JSON output: ' + json);

// Ensure ContentService is defined and working

if (typeof ContentService === 'undefined') {

throw new Error('ContentService is not defined.');

}

// Return the JSON output

var output = ContentService.createTextOutput(json).setMimeType(ContentService.MimeType.JSON);

Logger.log('Output object created successfully.');

Logger.log('Output object: ' + output.getContent());

return output;

} catch (error) {

Logger.log('Error: ' + error.message);

return ContentService.createTextOutput(JSON.stringify({ error: error.message })).setMimeType(ContentService.MimeType.JSON);

}

}

function formatDate(dateString, timeZone) {

var date = new Date(dateString);

return Utilities.formatDate(date, timeZone, "yyyy-MM-dd");

}

function formatTime(timeString, timeZone) {

var date = new Date(timeString);

return Utilities.formatDate(date, timeZone, "hh:mm:ss a");

}

function getLast6000Rows() {

try {

Logger.log("getLast6000Rows function called");

var sheet = SpreadsheetApp.openById(SCRIPT_PROP.getProperty("key")).getSheetByName(SHEET_NAME);

Logger.log('Sheet accessed successfully');

var lastRow = sheet.getLastRow();

Logger.log('Last row number: ' + lastRow);

var startRow = Math.max(2, lastRow - 5999); // Start at row 2 so the header row is never returned as data when there are fewer than 6000 rows

Logger.log('Start row number: ' + startRow);

var numRows = lastRow - startRow + 1;

Logger.log('Number of rows to fetch: ' + numRows);

var dataRange = sheet.getRange(startRow, 1, numRows, sheet.getLastColumn());

var data = dataRange.getValues();

Logger.log('Fetched data: ' + JSON.stringify(data));

var headers = sheet.getRange(1, 1, 1, sheet.getLastColumn()).getValues()[0];

Logger.log('Headers: ' + JSON.stringify(headers));

var result = data.map(function(row) {

var rowObject = {};

headers.forEach(function(header, index) {

if (header === 'Date') {

rowObject[header] = formatDate(row[index], "America/Chicago");

} else if (header === 'Time') {

rowObject[header] = formatTime(row[index], "America/Chicago");

} else {

rowObject[header] = row[index];

}

});

return rowObject;

});

Logger.log('Result object: ' + JSON.stringify(result));

var json = JSON.stringify(result);

Logger.log('JSON output: ' + json);

var output = ContentService.createTextOutput(json).setMimeType(ContentService.MimeType.JSON);

Logger.log('Output object created successfully.');

Logger.log('Output object: ' + output.getContent());

return output;

} catch (error) {

Logger.log('Error: ' + error.message);

return ContentService.createTextOutput(JSON.stringify({ error: error.message })).setMimeType(ContentService.MimeType.JSON);

}

}

And here’s a visual of what’s going on:

And here’s the requests form to see the data:

Latest Sensor Reading Google Sheets (button loads latest data):

Google Sheets Data Display

| Date | Time | Humidity % | Temp F | Temp C |
|---|---|---|---|---|

Latest Sensor Reading ThingSpeak (press button):

ThingSpeak Data Display

| Date | Time | Humidity % | Temp F | Temp C |
|---|---|---|---|---|

Latest Sensor Reading MongoDB (Read-Only User):

MongoDB Data Display

| Date | Time | Humidity % | Temp F | Temp C |
|---|---|---|---|---|

Latest Sensor Reading Vercel PostgreSQL (Read-Only User):

Vercel Data Display

| Date | Time | Humidity % | Temp F | Temp C |
|---|---|---|---|---|

Finally, as in this earlier post: https://jesse-anderson.github.io/Blog/_site/posts/Pi-Sensor-Part-2/ we have a plot of the last 6000 or so values, this time pulled from Google Sheets. Note the changes to the Google Apps Script above that make this possible.

Pi Environment Test Data
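To make the plotting step more concrete, here is a minimal sketch of the two pieces involved: choosing which rows to return, and reshaping the returned JSON into series for a chart. `lastRowsWindow` and `toSeries` are hypothetical helpers and the sample rows are made up. The window calculation assumes row 1 holds the headers and should be skipped.

```javascript
// Hypothetical helper: row window covering the last `count` data rows,
// assuming row 1 holds the headers.
function lastRowsWindow(lastRow, count) {
  const startRow = Math.max(2, lastRow - count + 1);
  const numRows = Math.max(0, lastRow - startRow + 1);
  return { startRow: startRow, numRows: numRows };
}

// Hypothetical helper: turn row objects (keyed by header name) into
// x/y arrays that a plotting library can consume.
function toSeries(rows, field) {
  return {
    x: rows.map(function (row) { return row.Date + ' ' + row.Time; }),
    y: rows.map(function (row) { return Number(row[field]); }),
  };
}

// Made-up rows in the shape returned for action=getLast6000Rows.
const sampleRows = [
  { 'Date': '2024-07-01', 'Time': '12:00:00 PM', 'Humidity %': 45.2, 'Temp F': 72.5, 'Temp C': 22.5 },
  { 'Date': '2024-07-01', 'Time': '12:00:30 PM', 'Humidity %': 45.0, 'Temp F': 72.7, 'Temp C': 22.6 },
];

console.log(lastRowsWindow(10000, 6000));
console.log(toSeries(sampleRows, 'Temp F'));
```

Requesting fewer rows shrinks both the response and the time spent formatting each row, at the cost of a shorter plotted history.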

Moving forward, I’ll likely add an LCD display, an enclosure, and more sensors, but the main point of this exercise was to become more familiar with simpler, cost-effective IoT devices. Additionally, extending Google Apps Script’s functionality to include pulling data for plotting is tremendously useful for anyone who doesn’t want to pay for ThingSpeak.

Other thoughts:

Getting the sensor to reliably take readings was a pain. Slightly moving the sensor caused readings to fail and no data to be transmitted.

Moving beyond a simple breadboard and hand soldering connections makes sense, but only for production. If that were the case, the 4-8 or so hours needed to get a C/C++ version of this code up and running would also make sense.

Google Apps Script is awesome, and it’s usually on me if something breaks. I can log about four years’ worth of data at 1 log/min:

2 million rows (at 5 entries per row) / 60 logs per hour / 24 hours per day / 365.25 days per year = 3.8 years

At 2 logs/min, or 30 seconds per log: 1.9 years.
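The same estimate as a small calculation. `yearsOfLogging` is a hypothetical helper, and the 2 million row budget is simply the figure used above.

```javascript
// Hypothetical helper: how many years a row budget lasts at a given log rate.
function yearsOfLogging(totalRows, logsPerMinute) {
  const logsPerYear = logsPerMinute * 60 * 24 * 365.25; // min/hour * hours/day * days/year
  return totalRows / logsPerYear;
}

console.log(yearsOfLogging(2000000, 1).toFixed(1)); // "3.8"
console.log(yearsOfLogging(2000000, 2).toFixed(1)); // "1.9"
```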

The limited processing power, memory, and electrical power (wattage) available to an ESP32 forced me to re-evaluate the order of operations at several steps. Notably, I spent some time debugging the Wi-Fi until I tried selectively powering things on or off. I also found that the program would randomly crash during POSTs, and that the size of the response received was what caused the memory error.

With the previous post, most of the legwork in setting up the web interfaces was already taken care of, so all I had to do was the wiring, some rewriting of the Python code (notably the Google Sheets sending), and overhauling the Google Apps Script. Luckily, I finally moved away from ThingSpeak for acquiring the plot data, and now I grab it directly from Google. Loading takes a little longer now, and I could halve the time by grabbing less data. 6,000 data points is a lot; at a 30-second sampling time, that’s about 50 hours of data in each plot.

A breakout board would have sped up figuring out the circuit before placing everything down. I picked one up for a Pi Pico I’m tinkering with for a plant project, along with an Adafruit Pi Cobbler (basically a breakout board) for a Raspberry Pi, and being able to reference pins faster is nice. It would likely be best to have a high-quality breakout board alongside a final proto board to make sure the design sticks and loose connections don’t hurt me.